Introduction to quantum computing

Quantum computing, simply put, is a new way to process information using the laws of quantum physics: the rules that govern nature at tiny size scales. After decades of effort in science and engineering, we’re now ready to put quantum mechanics to work solving problems that are exceptionally difficult for conventional computers.

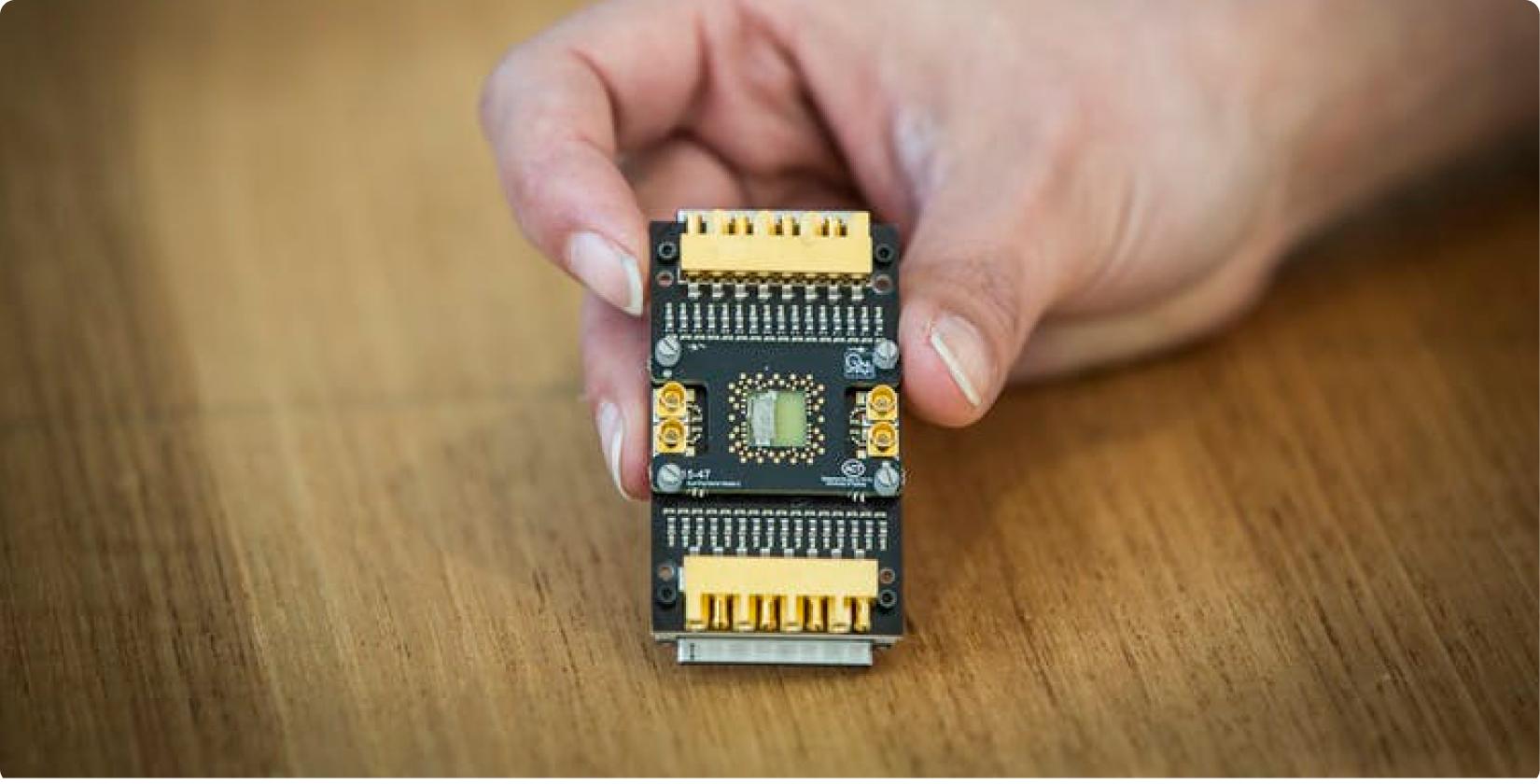

Image by Michael Biercuk.

A major milestone came in 1994 with the discovery of Shor’s algorithm for factoring large numbers. Finding the prime factors of a very large number (that is, the two prime numbers, divisible only by one and themselves, that multiply together to give a target number) is extremely difficult. Make the number big enough and classical computers simply cannot factor it: performing all the calculations needed to identify its prime factors would take far too long, even millions of years. Public-key cryptosystems such as RSA, which underpin much of today’s secure communication, rely on this difficulty of factoring large numbers to keep messages safe from prying computers.
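To see why factoring gets hard so quickly, consider trial division, the most naive classical method: try every candidate divisor up to the square root of the number. The sketch below is only an illustration (the function name `trial_division` is our own, not from any library); practical attacks use much better algorithms such as the general number field sieve, which are nonetheless still far too slow for numbers of cryptographic size.

```python
import math


def trial_division(n: int) -> tuple[int, int, int]:
    """Factor n by trying every divisor from 2 up to sqrt(n).

    Returns (p, q, steps) where p * q == n, p is the smallest factor
    greater than 1, and steps counts the divisions attempted.
    """
    steps = 0
    for d in range(2, math.isqrt(n) + 1):
        steps += 1
        if n % d == 0:
            return d, n // d, steps
    return n, 1, steps  # no divisor found, so n is prime


print(trial_division(15))             # (3, 5, 2)
print(trial_division(10007 * 10009))  # (10007, 10009, 10006)
```

The number of steps grows roughly with the square root of the number, so every two extra binary digits about double the work. For a 2048-bit number, that is on the order of 2^1024 divisions.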

Because of the way they encode and process information, however, quantum computers running Shor’s algorithm could factor large numbers vastly faster than the best known classical methods, a speedup often described as exponential. A conventional computer processes numbers made up of 1s and 0s and can only process one number at a time: one in, one out. A quantum computer gains its advantage by creating what’s known as a “superposition” of many numbers at the same time and running an algorithm on this superposition. Quantum algorithm developers have devised clever programs that make the many possible numbers “interfere” with each other, so that the incorrect outputs cancel each other out and disappear and only the correct answers remain. This interference effect is analogous to how waves interfere on the surface of a pond.

Image by Jayne Ion/University of Sydney.

Figuring out how to do this is no simple task, and today only a handful of quantum algorithms exist that fully exploit this capacity for processing quantum information.
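The cancellation described above can be seen in miniature with a classical simulation of a single qubit. The sketch below is a toy illustration, not part of Shor’s algorithm: it applies the standard Hadamard gate twice. The first application creates an equal superposition of 0 and 1; the second makes the two contributions to the 1 outcome cancel, so the qubit returns to 0 with certainty. The helper names `apply` and `probabilities` are our own.

```python
import math

# A single qubit's state is a pair of amplitudes: one for 0, one for 1.
S = 1 / math.sqrt(2)
H = [[S, S],
     [S, -S]]  # the Hadamard gate


def apply(gate, state):
    """Multiply a 2x2 gate matrix by a 2-element state vector."""
    return [sum(gate[i][j] * state[j] for j in range(2)) for i in range(2)]


def probabilities(state):
    """Measurement probabilities are the squared magnitudes of amplitudes."""
    return [abs(a) ** 2 for a in state]


zero = [1.0, 0.0]
superposed = apply(H, zero)        # equal superposition of 0 and 1
recombined = apply(H, superposed)  # the two paths to 1 cancel out

print(probabilities(superposed))   # each entry close to 0.5
print(probabilities(recombined))   # close to [1.0, 0.0]
```

Useful quantum algorithms orchestrate many such cancellations across many qubits at once, which is a large part of why designing them is hard.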

Very simple demonstrations have successfully factored two-digit numbers on quantum computers, and research is now focused on how to design and operate a quantum computer large enough to factor long numbers (e.g. 2048-bit numbers, a size commonly used for RSA keys). There is a very long way to go to reach this potential, likely several decades, but a quantum computer that achieves it would be able to break the public-key encryption in use today. The upshot would be to leave the entire public-key encryption system vulnerable, and that system is used today not only to protect messages but also to secure transactions.
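Shor’s algorithm works by turning factoring into a different problem: finding the period r of the sequence a^x mod N for a randomly chosen a. Only that period-finding step needs a quantum computer; the rest is ordinary arithmetic. The sketch below finds the period by brute force on a classical machine, which is only feasible for toy numbers like 15 and 21, to show how a period turns into factors. The function names are illustrative and not taken from any quantum library.

```python
import math
from typing import Optional


def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the step a quantum computer would speed up. Assumes
    gcd(a, n) == 1, otherwise the loop would never terminate.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_via_period(n: int, a: int) -> Optional[tuple[int, int]]:
    """Classical post-processing step of Shor's algorithm for one guess a."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g        # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None             # odd period: retry with a different a
    x = pow(a, r // 2, n)
    if x == n - 1:
        return None             # trivial square root: retry with a different a
    p = math.gcd(x - 1, n)
    return p, n // p


print(factor_via_period(15, 7))  # (3, 5)
print(factor_via_period(21, 2))  # (7, 3)
```

For N = 15 and a = 7 the period is 4, so 7^2 mod 15 = 4, and gcd(4 - 1, 15) = 3 reveals the factor 3. When a guess fails, the algorithm simply retries with a different a.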

Other applications, in materials science and chemistry, could prove equally impactful and on much shorter timescales. For instance, it is very difficult to build a computer model that represents all of the possible electron interactions in a molecule, as governed by the rules of quantum physics, because the number of possible configurations grows exponentially with the number of electrons and orbitals involved. As a result, it is challenging for today’s computers to calculate reaction rates, model combustion, and predict other chemical effects. Even using the best available approximations, modelling relatively simple molecules on the world’s fastest computer could take longer than the age of the universe.
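One way to see the scale of the problem: an exact description of n interacting two-level quantum systems (for example n qubits, or, in a simplified model of a molecule, n spin-orbitals) needs 2^n complex amplitudes. The sketch below assumes 16 bytes per amplitude (two double-precision floats) and ignores the approximations real chemistry codes rely on; it only counts what a brute-force exact representation would require. The helper `state_vector_bytes` is hypothetical.

```python
def state_vector_bytes(n: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for every amplitude of an n-system quantum state.

    There are 2**n complex amplitudes; by default each is assumed to
    take 16 bytes (a pair of 64-bit floats).
    """
    return (2 ** n) * bytes_per_amplitude


for n in (10, 30, 50, 300):
    print(n, f"{state_vector_bytes(n):.3e} bytes")
```

Thirty systems already need 16 GiB, fifty need 16 PiB, and at a few hundred the number of amplitudes exceeds the estimated number of atoms in the observable universe.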

Quantum computing could also help develop revolutionary artificial intelligence systems. Recent efforts have demonstrated a strong and unexpected link between quantum computation and artificial neural networks, potentially portending new approaches to machine learning.

Today, small-scale machines with a couple of dozen interacting elements, called qubits, already exist in labs around the world, built from exotic materials. Some can even be accessed via the cloud. But these small-scale quantum machines are very fragile and suffer from many errors.

These early machines are still too small and too fragile to solve useful problems, but we’re making great progress and getting close to quantum advantage: the point at which it’s actually cheaper or faster to use a quantum computer for a real problem. We think that within the next 5 to 10 years we’ll cross that threshold with a quantum computer that isn’t much bigger than the ones we have today. It just needs to perform much better.
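Why does performance matter as much as size? Under a deliberately simplified model in which every operation fails independently with the same probability p, a circuit of G operations runs without any error with probability (1 - p)^G. The sketch below uses a hypothetical helper and an assumed circuit of 1,000 operations to show how sharply that depends on p.

```python
def success_probability(error_per_gate: float, num_gates: int) -> float:
    """Probability that none of num_gates operations fails.

    Simplified model: each operation fails independently with
    probability error_per_gate.
    """
    return (1 - error_per_gate) ** num_gates


for p in (1e-2, 1e-3, 1e-4):
    print(f"error rate {p:g}: {success_probability(p, 1000):.3f}")
```

With 1,000 operations, an error rate of 1 in 100 leaves almost no chance of an error-free run, 1 in 1,000 gives roughly 37%, and 1 in 10,000 gives roughly 90%. Real devices have correlated and non-uniform errors, so this is only a rough guide.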

That is precisely where Q-CTRL comes in: our primary focus is helping customers extract maximum performance from quantum computers, whether in the lab or in the cloud.

Q-CTRL’S WORK IN QUANTUM COMPUTING

Quantum computing is one of the most exciting new technologies of the 21st century. It promises to upend nearly every part of the economy, from finance to information security. And Q-CTRL is making it actually useful by overcoming the Achilles’ heel of quantum computing: hardware error.

Partially adapted from The Leap into Quantum Technology: A Primer for National Security Professionals
